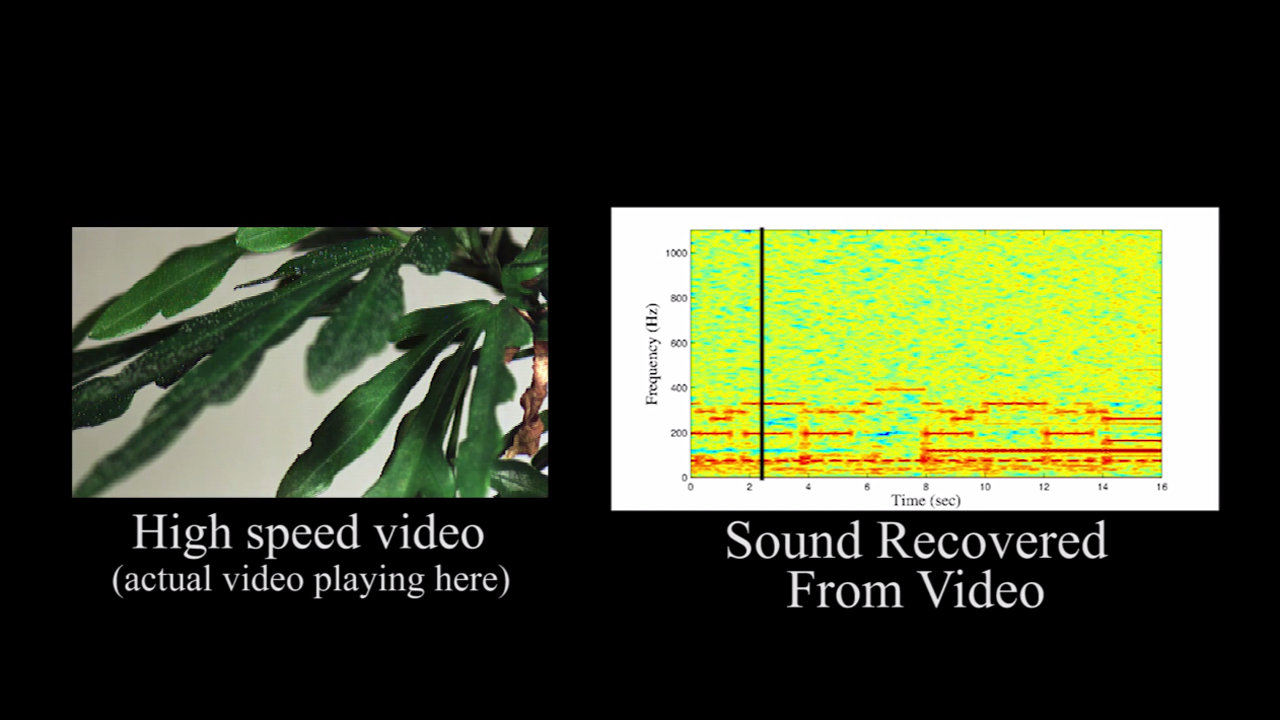

Using an algorithmic technique, researchers at MIT can now reconstruct the audio that makes objects vibrate just by “reading” video of those objects vibrating. The data, like a houseplant’s “minute” movements generated by someone playing “Mary Had a Little Lamb” at it, can be gathered and translated into audio signals, which can then be played back. Behold, muffled, creepy “Mary Had a Little Lamb,” via houseplant.

It gets cooler:

The method can be used to extract intelligible speech from video of a bag of potato chips filmed from 15 feet away through soundproof glass.

In other experiments, they extracted useful audio signals from videos of aluminum foil, the surface of a glass of water, and even the leaves of a potted plant. The researchers will present their findings in a paper at this year’s Siggraph, the premier computer graphics conference.

Holy shit.