ANIMAL’s Radicals Of Retrofuturism uncovers stories by the technological rebels of the past in vintage media and looks at their predictions in the context of today’s digital world. This week, we take a look at Synapse magazine’s 1978 interview with David Rosenboom, as well as neurological feedback in early electronic music and contemporary science.

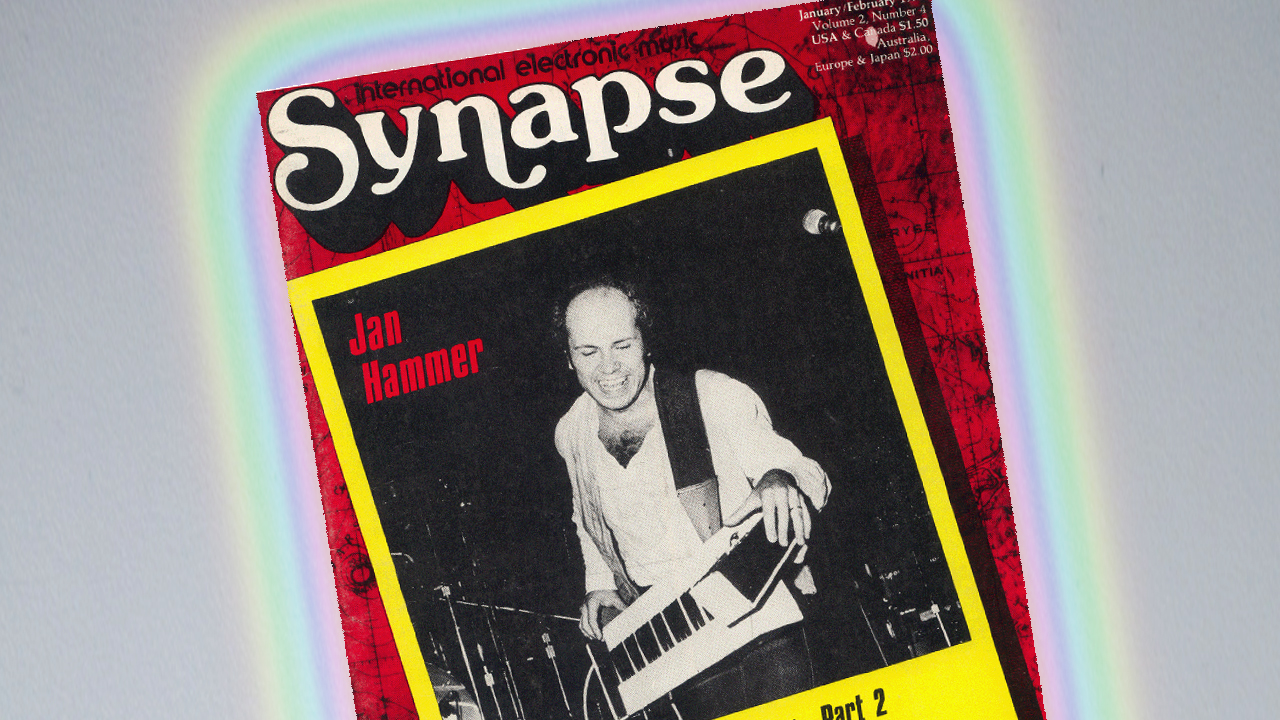

Synapse, a bi-monthly American magazine published from March 1976 to June 1979, took electronic music very seriously. That makes sense: At the time, electronic music was the passion of a dedicated few, far from being fused with every pop song heard by millions as it is today. The magazine featured early interviews with Robert Moog, DEVO and many other pioneers of electronic music of the late ’70s. Its issues were heavy on interviews with engineers and artists discussing technical details, at a time when electronic music was almost solely the realm of the technology-minded.


In the midst of Rosenboom’s interview, there’s an aside that predicted our present better than he could have imagined. He says:

If you extract a lateral and vertical pressure curve from the action of touching a sensor and think of it as a kinesthetic input to the system, then you can use routines that will take advantage of the fact that the forms of touch that are associated with types of expression are relatively constant over a wide range of humanity.

What he’s talking about here is the seed of touch screens, electronic drum pads, tablets, and countless other touch-sensitive technologies. His wording also implies a connection between emotion and touch, something scientists have recently confirmed about the way we type. He also advocates for devices that learn algorithmically from that touch:

The idea is to use a pattern recognition routine that teaches itself. As you play it more it gets increasingly used to your feel. So if you can define the action of touching a sensor with a particular gestural form as an arbitrarily programmable stimulus to the system, then you have an increased range of stimuli recognizable by the system from touching the same sensor.
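For a rough modern picture of what a routine that "teaches itself" might look like, here is a minimal Python sketch. It is not Rosenboom's method: it assumes each touch arrives as a fixed-length list of pressure readings, and it swaps in a simple nearest-centroid learner that nudges a running average toward every new example. The class name `GestureLearner`, the gesture labels, and the sample curves are all hypothetical.

```python
from math import dist


class GestureLearner:
    """Online nearest-centroid learner over fixed-length pressure curves.

    Illustrative assumption: a gesture is a list of pressure samples,
    all lists having the same length.
    """

    def __init__(self):
        self.centroids = {}  # label -> running mean curve for that gesture
        self.counts = {}     # label -> number of examples seen so far

    def learn(self, label, curve):
        """Fold one more example into the running mean for this label."""
        n = self.counts.get(label, 0)
        if n == 0:
            self.centroids[label] = list(curve)
        else:
            mean = self.centroids[label]
            # Incremental mean update: the more you play, the more the
            # stored curve settles on your personal "feel".
            self.centroids[label] = [
                m + (x - m) / (n + 1) for m, x in zip(mean, curve)
            ]
        self.counts[label] = n + 1

    def recognize(self, curve):
        """Return the label whose mean curve is closest, or None if untrained."""
        if not self.centroids:
            return None
        return min(
            self.centroids,
            key=lambda label: dist(self.centroids[label], curve),
        )


# Hypothetical pressure curves: a quick tap versus a sustained press.
learner = GestureLearner()
learner.learn("tap", [0.0, 0.9, 0.1, 0.0])
learner.learn("tap", [0.0, 1.0, 0.2, 0.0])
learner.learn("press", [0.2, 0.6, 0.8, 0.7])
print(learner.recognize([0.0, 0.8, 0.1, 0.0]))
```

The same sensor yields different stimuli depending on the shape of the touch, which is the point Rosenboom makes above; a real instrument would need far richer features than four pressure samples.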

Rosenboom was convinced that neurofeedback was the way forward for electronic instrument design. But he didn’t just talk about using neurofeedback to produce music — with the limited technology of the time, he found a way to do it himself. In 1977, he performed a piece called “On Being Invisible” in Toronto, in which he used his own brain signals to influence a composition. The recording has a mystical, spacey quality, as small bells ting and the oscillators sound like they’re sucking you up into a mothership.

Now, with our much-expanded technological prowess, the possibilities for an interplay between neurological feedback and the arts are virtually limitless. Musician Chris Chafe and scientist Josef Parvizi told New Scientist this year about their work creating a “Brain Stethoscope,” which both produces music from brain signals and feeds those sounds directly back into the part of the brain that interprets them.

Humans have great auditory acuity when it comes to comparing slightly mismatched things. We’re taking inaudible brain signals and making them audible through sonification. Then we feed them to the areas in the brain that recognise patterns in music. The result is our Brain Stethoscope.

All brain activity can be described in musical terms. A flat-line brain signal produces a dull, computery-sounding singing voice. Normally, an electrode channel has a bit of a wiggle to it and you’ll hear it modulate the pitch up and down. It modulates at a slow enough rate that we hear it as something like a vibrato or a pitch inflection.

It’s not all that unfamiliar, because of the happy accident that the speed of those undulations is in the range of the speed of the inflections you get in music. For a lot of these things, the proof is in the hearing.
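The mapping Chafe describes, in which a slow wiggle in one electrode channel bends the pitch of a voice, can be approximated in a few lines of Python. This is only a sketch under stated assumptions, not the Brain Stethoscope itself: it uses a plain sine tone in place of a synthesized singing voice, it expects input values roughly between -1 and 1, and the function name `sonify` and every parameter (`base_hz`, `depth_hz`, `sample_rate`, `samples_per_value`) are invented for illustration.

```python
import math


def sonify(signal, base_hz=220.0, depth_hz=30.0,
           sample_rate=8000, samples_per_value=800):
    """Turn a slowly varying signal into audio samples whose pitch follows it.

    Each input value (assumed to lie roughly in -1..1) shifts the tone's
    frequency by up to depth_hz around base_hz for samples_per_value samples.
    """
    audio = []
    phase = 0.0
    for value in signal:
        freq = base_hz + depth_hz * value
        for _ in range(samples_per_value):
            # Accumulate phase so the tone stays continuous when pitch changes.
            phase += 2 * math.pi * freq / sample_rate
            audio.append(math.sin(phase))
    return audio


# A flat-line input gives a steady tone; a slow wiggle gives a vibrato-like bend.
flat = sonify([0.0] * 10)
wiggle = sonify([math.sin(2 * math.pi * i / 10) for i in range(10)])
print(len(flat), len(wiggle))
```

With the defaults, ten input values per second produce a pitch wobble slow enough to be heard as inflection rather than as a separate tone, which is the "happy accident" the quote refers to.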

The team has even proven that their Brain Stethoscope can help neurologists detect seizures with 95% accuracy – hearing them is easier than seeing them.

Meanwhile, Professor Eduardo Miranda of Plymouth University has been working on transforming neurofeedback into classical music. Miranda has worked on many projects combining neurology and music, some including full orchestras. In one experiment, he had subjects wear an EEG device while focusing on one of several checkered squares. Their brain waves would select passages of music, which a cellist on the other side of the table played back to them. A similar experiment (minus the cellist) can be seen in the video below:

Rosenboom continues to experiment and compose today, working with both electronic and analog instruments, teaching, and even writing experimental, generative operas. Far from being cynical about how his practice and medium have developed, he told a friend that he welcomes these changes and has great optimism for young artists who, thanks to the internet, have nearly every musical tradition at their fingertips.

I relish the delicious challenges found today in building musical forms that invite active, imaginative participation from all citizens of the cosmos to explore and create with each other’s musical languages in coherent ways. Far from resulting in musical salmagundi, when handled properly, these processes can reveal enabling commonalities and potent tools for building on differences constructively. What we are learning today about honoring and balancing diversity with unity is changing profoundly the nature of musical composition and performance.