Our ability to read the emotions of others through body language (sometimes more accurately than through their words) is one of those psychological phenomena that make humans awesome. But could a computer do it better than a flesh-and-blood psychiatrist?

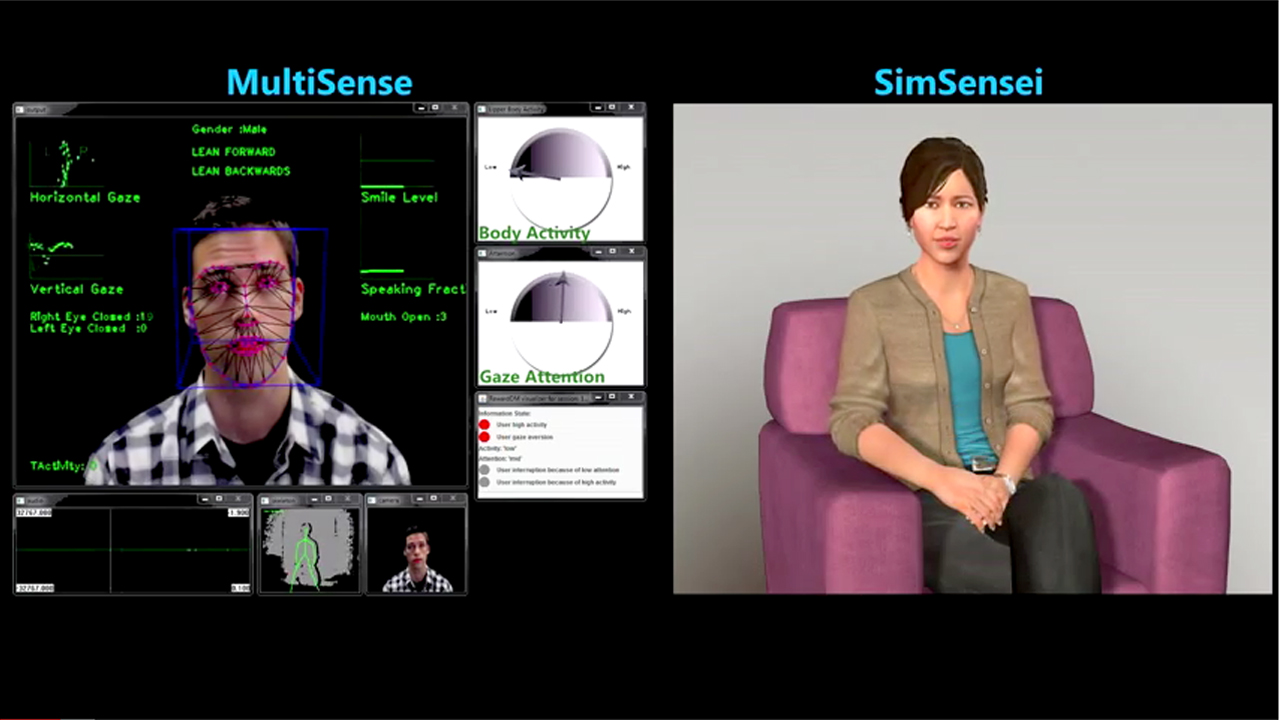

The digital program SimSensei tries: It monitors users’ subtle body language and facial expressions to help diagnose depression and similar disorders. While a digital avatar asks the patient questions typical of a talk therapy session (“When was the last time you felt really happy?” and so on), the program’s depth-sensing cameras and facial recognition technology are busy picking up on nonverbal cues, like an averted gaze or uncomfortable shifting, that a shrink might overlook.

SimSensei is still in development, but it could be a big deal for psychology. The current standard for diagnosing depression relies heavily on conversation and written questionnaires, which leaves lots of room for error when the patient isn't able (or willing) to put their feelings into words. SimSensei isn't the only program of its kind, and we should expect to see more like it in the future: next month, doctors and developers from around the world will attend the Automatic Face and Gesture Recognition Conference in Shanghai to discuss work in the field.

And of course, there is absolutely nothing unsettling and sci-fi-dystopia-like about this at all. Nope. Not a thing.